High-End Varnish - 275 thousand requests per second.

Posted on 2010-10-23

Varnish is known to be quite fast. But how fast? My very first Varnish-job was to design a stress testing scheme, and I did so. But it was never really able to push things to the absolute max. Because Varnish is quite fast.

In previous posts I've written about hitting 27k requests per second on an aging Opteron (see http://kly.no/posts/2010_01_13__Pushing_Varnish_even_further__.html).

Recently, we were going to do a stress test at a customer setup before putting it live. The setup consisted of two dual Xeon X5670 machines. The X5670 is a 2.93GHz six-core CPU with hyperthreading, giving each of these machines 12 CPU cores and 24 CPU threads. Quite fast. During our tests, I discovered some httperf secrets (sigh...) and was able to push things quite far. This is what we learned.

The hardware and software

As described above, we only had two machines for the test. One was the target and the other was the originating machine. The network was gigabit.

Varnish 2.1.3 on 64bit Linux.

Httperf for client load.

The different setups to be tested

Our goal was not to reach the maximum limits of Varnish, but to ensure the site was ready for production. That's quite tricky on many counts.

The machines were originally configured with heartbeat and haproxy.

One test I'm quite fond of is site traversal while hitting a "hot" set at the same time. The intention is to test how your site fares if a ruthless search bot hits your site. Does your front page slow down? As far as Varnish goes, it tests the LRU-capabilities and how it deals with possibly overloaded backend servers.
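A minimal sketch of how such a mixed request stream could be generated. The URL lists, the 80/20 split and the function name are made up for illustration, and this is not one of the scripts used in the test described here.

```python
import random


def mixed_workload(hot_urls, crawl_urls, hot_fraction=0.8, seed=0):
    """Yield one URL per request.

    Most requests go to the small "hot" set. The rest walk through the
    whole site once, in order, the way a crawler would.
    """
    rng = random.Random(seed)  # seeded so a test run can be repeated
    crawl = iter(crawl_urls)
    while True:
        if rng.random() < hot_fraction:
            yield rng.choice(hot_urls)
        else:
            try:
                yield next(crawl)
            except StopIteration:
                return  # traversal finished, so the run ends


# Hypothetical site: three hot pages and a thousand articles to crawl.
hot = ["/", "/news", "/sport"]
site = [f"/article/{i}" for i in range(1000)]
requests = list(mixed_workload(hot, site))
print(len(requests), "requests,", len(site), "of them cache-cold crawl hits")
```

The interesting measurement is then whether response times for the hot URLs change while the crawl portion is pushing objects through the LRU list.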

We also switched out haproxy in favor of a dual-varnish setup. Why? Two reasons: 1. Our expertise is within the realm of Varnish. 2. Varnish is fast and does keep-alive.

When testing a product like Varnish we also have to take the balance between requests and connections into account. You'll see shortly that this is very important.

During our tests, we also finally got httperf to stress the threading model of Varnish. With a tool like siege, concurrency is defined by the threading level. That's not the case with httperf, and we were able to do several thousand _concurrent_ connections.
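To make the connection/request balance concrete, here is a small sketch that assembles an httperf command line. The flags are standard httperf options, but the host name, the rates and the helper function are assumptions for illustration, not the exact invocation used in these tests. httperf opens connections at a fixed rate (--rate) regardless of how quickly the server answers, and --num-calls sets how many requests are sent on each connection.

```python
import shlex


def httperf_command(server, uri="/", port=80, conn_rate=2000,
                    calls_per_conn=10, duration_s=60):
    """Build an httperf command with a fixed connection rate.

    conn_rate      : new connections opened per second (--rate)
    calls_per_conn : requests sent on each connection (--num-calls),
                     i.e. the "10" in a 1:10 connection:request ratio
    """
    num_conns = conn_rate * duration_s  # total connections for the run
    args = [
        "httperf", "--hog",
        "--server", server,
        "--port", str(port),
        "--uri", uri,
        "--rate", str(conn_rate),
        "--num-conns", str(num_conns),
        "--num-calls", str(calls_per_conn),
        "--timeout", "5",
    ]
    return " ".join(shlex.quote(a) for a in args)


# "varnish.example.com" is a placeholder host name.
print(httperf_command("varnish.example.com"))
```

With these illustrative numbers, one client process would aim for 2000 connections/s and 20 000 requests/s.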

As the test progressed, we reduced the size of the content and it became more theoretical in nature.

As the specifics of the backends are not that relevant, I'll keep to the Varnish-specific bits for now.

I ended up using a 301 redirect as a test this time. Mostly because it was there. Towards the end, I had to remove various varnish-headers to free up bandwidth.

Possible bottlenecks

The most obvious bottleneck during a test like this is bandwidth. That is the main reason for reducing the size of objects served during testing.

Another bottleneck is how fast your web servers are. A realistic test requires cache misses, and cache misses require responsive web servers.

Slow clients are a problem too. Unfortunately testing that synthetically isn't easy. Lack of test-clients has been an issue in the past, but we've solved this now.

CPU? Traditionally, the cpu-speed isn't much of an issue with Varnish, but when you rule out slow backends, bandwidth and slow clients, the cpu is the next barrier.

One thing that's important in this test is that the sheer number of parallel execution threads is staggering. My last "big" test had 4 execution threads, this one has 24. This means we get to test contention points that only occur if you have massive parallelization. The most obvious bottleneck is the acceptor-thread: the thread charged with accepting connections and delegating them to a worker thread. Even though multiple thread pools are designed to alleviate this problem, the actual accept()-call is done in a single thread of execution.

Connections

As Artur Bergman of Wikia has already demonstrated, the number of TCP connections Varnish is able to accept per second is currently our biggest bottleneck. Fortunately for most users, Artur's work-load is very different from that of most other Varnish users. We (Varnish Software) typically see a 1:10 ratio between connections and requests. Artur suggested he's closer to 1:3 or 1:4.

During this round of tests I was easily able to reach about 40k connections/s. However, going much above that is hard. For a "normal" workload, that would allow 400k requests/second, which is more than enough. However, it should be noted that the accept-rate goes somewhat down as the general load increases.
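The arithmetic behind that is simple enough to spell out. This sketch assumes the accept rate stays at 40k connections/s, which, as noted, is optimistic under load. The function name is made up.

```python
def max_request_rate(accept_rate, requests_per_connection):
    """Upper bound on requests/s when the accept rate is the only limit."""
    return accept_rate * requests_per_connection


ACCEPT_RATE = 40_000  # connections/s, the figure reached in this test

for label, ratio in [("1:10", 10), ("1:4", 4), ("1:3", 3)]:
    print(label, max_request_rate(ACCEPT_RATE, ratio), "req/s")
```

So the same accept limit gives 400k req/s at 1:10, but only 120k to 160k req/s at the 1:3 or 1:4 ratios, which is why the acceptor matters so much more for that kind of traffic.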

It was interesting to note that this was largely unaffected by having two varnishes in front of each other. This essentially confirms that the acceptor is the bottleneck.

There wasn't much we could do to affect this limit either. Increasing the listen_depth isn't going to help you in a synthetic test. The listen_depth defines how many outstanding connections are allowed to queue up before the kernel starts dropping them. In the real world, the connection-rate will be sporadic and on an almost-overloaded system, it might help to increase the listen depth, but in a synthetic test the connection rate is close to constant. That means increasing the listen depth just means there's a bigger queue to fill - and it will fill anyway.
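A toy model shows why. It assumes a constant arrival rate slightly above what the acceptor can handle, one-second ticks, and a kernel that simply drops whatever doesn't fit in the queue. All the numbers are illustrative, not measurements, and real kernel behaviour is more subtle than this.

```python
def simulate_backlog(arrival_rate, accept_rate, listen_depth, seconds):
    """Return (total dropped connections, first second with a drop)."""
    queued = 0
    dropped = 0
    first_drop = None
    for second in range(1, seconds + 1):
        queued += arrival_rate              # new connections arrive
        queued -= min(queued, accept_rate)  # acceptor drains what it can
        if queued > listen_depth:           # overflow is dropped
            dropped += queued - listen_depth
            queued = listen_depth
            if first_drop is None:
                first_drop = second
    return dropped, first_drop


# 45k connections/s offered, 40k/s accepted, for ten seconds.
for depth in (1024, 8192):
    print(depth, simulate_backlog(45_000, 40_000, depth, 10))
```

The deeper queue delays the first drop and absorbs a few thousand extra connections once. After that, both configurations drop the same 5000 connections every second. With bursty real-world traffic the queue gets a chance to drain between bursts, which is the only situation where the extra depth pays off.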

The number of thread pools had little effect too. By the time the connection is delegated to a thread pool, it's already past the accept() bottleneck.

Now, keep in mind that this is still a staggering number. But it's also an obvious bottleneck for us.

Request rate

The raw request rate is essentially defined by how big the request is compared to bandwidth, how much CPU power is available and how fast you can get the requests into a thread.
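Put differently, the achievable rate is the smallest of three ceilings. A rough sketch follows. The 550 bytes per request/response exchange and the 500k req/s CPU ceiling are placeholder values picked for illustration, not measurements from this test.

```python
def bottleneck(link_bits_per_s, bytes_per_exchange, cpu_req_per_s,
               accept_conn_per_s, requests_per_conn):
    """Return (name, req/s) of whichever limit is hit first."""
    limits = {
        "bandwidth": link_bits_per_s / 8 / bytes_per_exchange,
        "cpu": cpu_req_per_s,
        "acceptor": accept_conn_per_s * requests_per_conn,
    }
    name = min(limits, key=limits.get)
    return name, limits[name]


# Gigabit link, 40k accepts/s, placeholder object size and CPU ceiling.
print(bottleneck(1e9, 550, 500_000, 40_000, requests_per_conn=10))
print(bottleneck(1e9, 550, 500_000, 40_000, requests_per_conn=3))
```

With many requests per connection, the link is what gives out first. With few, the acceptor is.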

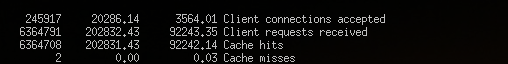

As we have already established that the acceptor-thread is a bottleneck, we needed to up the number of requests per connection. I tested mostly with a 1:10 ratio. This is the result of one such test:

The above image shows 202832 requests per second while doing roughly 20 000 connections/s. Quite a number.

It proved difficult to exceed this.

At about 226k req/s the bandwidth limit of 1gbit was easily hit. To reach that, I had to reduce the connection-rate somewhat. The main reason for that, I suspect, is increased latency when the network is saturated.
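That figure gives a feel for how small the responses had become. A back-of-the-envelope calculation, treating 1gbit as 10^9 bits per second and assuming the link is fully used in the busiest direction, with Ethernet and TCP/IP framing counted as part of the bytes:

```python
LINK_BITS_PER_S = 1_000_000_000  # 1gbit, assumed to mean 10^9 bits/s
REQUESTS_PER_S = 226_000         # rate at which the link saturated

bytes_per_second = LINK_BITS_PER_S / 8
bytes_per_request = bytes_per_second / REQUESTS_PER_S
print(f"{bytes_per_request:.0f} bytes on the wire per request")
```

That is roughly 550 bytes per request, headers and all. At that size every extra response header costs a measurable share of the link, which is why stripping headers frees up bandwidth.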

At this point, Varnish was not saturating the CPU. It still had 30-50% idle CPU power.

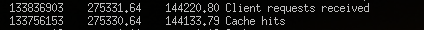

Just for kicks and giggles, I wanted to see how far we could really get, so I threw in a local httperf, thereby ignoring large parts of the network issue. This is a screenshot of Varnish serving roughly 1gbit traffic over network and a few hundred mbit locally:

So that's 275k requests/s. The connection rate at that point was lousy, so not very interesting. And because httperf was running locally, the load on the machine wasn't very predictable. Still, the machine was snappy.

But what about the varnish+varnish setup?

The above numbers are for a single Varnish server. However, when we tested with Varnish as a load balancer in front of Varnish, the results were nearly identical - except divided by two.

It was fairly easy to do 100k requests/second on both the load balancer and the varnish server behind it - even though both were running on the same machine.

The good thing about Varnish as a load balancer is its keep-alive nature, speed and flexibility. The contention-point of Varnish is long before any balancing is actually done, so you can have a ton of logic in your "Varnish load balancer" without worrying about load increasing with complexity.

We did, however, discover that the number of HTTP header overflows would spike on the second Varnish server. We're investigating this. The good news is that it was not visible on the user-side.

The next step

I am re-doing part of our internal test infrastructure (or rather: shifting it around a bit) to test the acceptor thread regularly.

I also discovered an assert issue during some sort of race at around 220k req/s, but that was only under very specific circumstances. It was not possible to reproduce on anything that wasn't massively parallel and almost saturated on CPU.

We're also constantly improving our test routines both for customer setups and internal quality assurance on the Varnish code base. I've already written several load-generating scripts for httperf to allow us to test even more realistic workloads on a regular basis.

What YOU should care about

The only thing that made a real difference while tuning Varnish was the number of threads. And making sure it actually caches.

Beyond that, it really doesn't matter much. Our defaults are good.

However, keep in mind that this does NOT address what happens when you start hitting disk. That's a different matter entirely.